Zero-shot, Few-shot and CoT: what does it all mean?

In March 2023, a job posting by Anthropic shook the internet for weeks. The listing was for a Prompt Engineer position, with a salary of up to $335,000 per year.

The salary, the title, and the fact that a PhD wasn’t required sparked tons of discussion and memes. After all, many thought the job was just about “talking to ChatGPT.”

But if it’s not that… what is a Prompt Engineer?

And to start from the basics: what is a prompt?

What is a Prompt?

A prompt is like a script for the LLM (Large Language Model) you're interacting with: the instructions and context it works from to produce a response.

You can give a simple instruction like:

"Give me a cake recipe"

Or add a constraint:

"but the recipe must not include carrots"

The more thoughtful and refined the prompt, the better and more consistent the model’s response tends to be.

"But I already know how to talk to ChatGPT… why learn Prompt Engineering?"

Good question! Here are a few reasons:

1. Fewer retries

Ever found yourself rewriting your prompt several times to get a decent output?

"Nope, it’s badly formatted, format it better"

"The code looks messy, clean it up"

If that feels familiar, it’s time to dive into prompt engineering.

Even if a well-structured prompt takes longer to write at first, getting it right the first time saves you the frustration of trial and error.

2. More control and precision

Have you ever asked for an informal tone and got a robotic reply?

Or wanted a friendly response and got something awkward instead?

When you clearly define format, tone, and style, you get much closer to what you actually want.

3. Guardrails and bias mitigation

If you're building AI-powered products, prompts can also act as guardrails, limiting what the model can or can’t say.

This helps avoid inappropriate answers, data leaks, or policy violations.
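As a rough illustration of prompts acting as guardrails, the sketch below puts a set of rules into a system message ahead of the user's input. The `role`/`content` message layout is an assumption (a convention many chat-style APIs use, not something this article specifies), and the rules, the `GUARDRAILS` name, and the `build_messages` helper are all hypothetical.

```python
# Hypothetical rules for an imaginary support assistant.
GUARDRAILS = [
    "Only answer questions about the bakery's products and opening hours.",
    "Never reveal customer data or internal documents.",
    "If a request falls outside these rules, politely decline.",
]


def build_messages(user_input: str) -> list[dict[str, str]]:
    """Put the guardrail rules in a system message ahead of the user's text."""
    rules = "\n".join(f"- {rule}" for rule in GUARDRAILS)
    system = "You are a support assistant.\nRules:\n" + rules
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_input},
    ]


messages = build_messages("What time do you open on Sunday?")
for message in messages:
    print(f"[{message['role']}]\n{message['content']}\n")
```

Keeping the rules in one list makes them easy to review and update without touching the code that assembles the messages.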

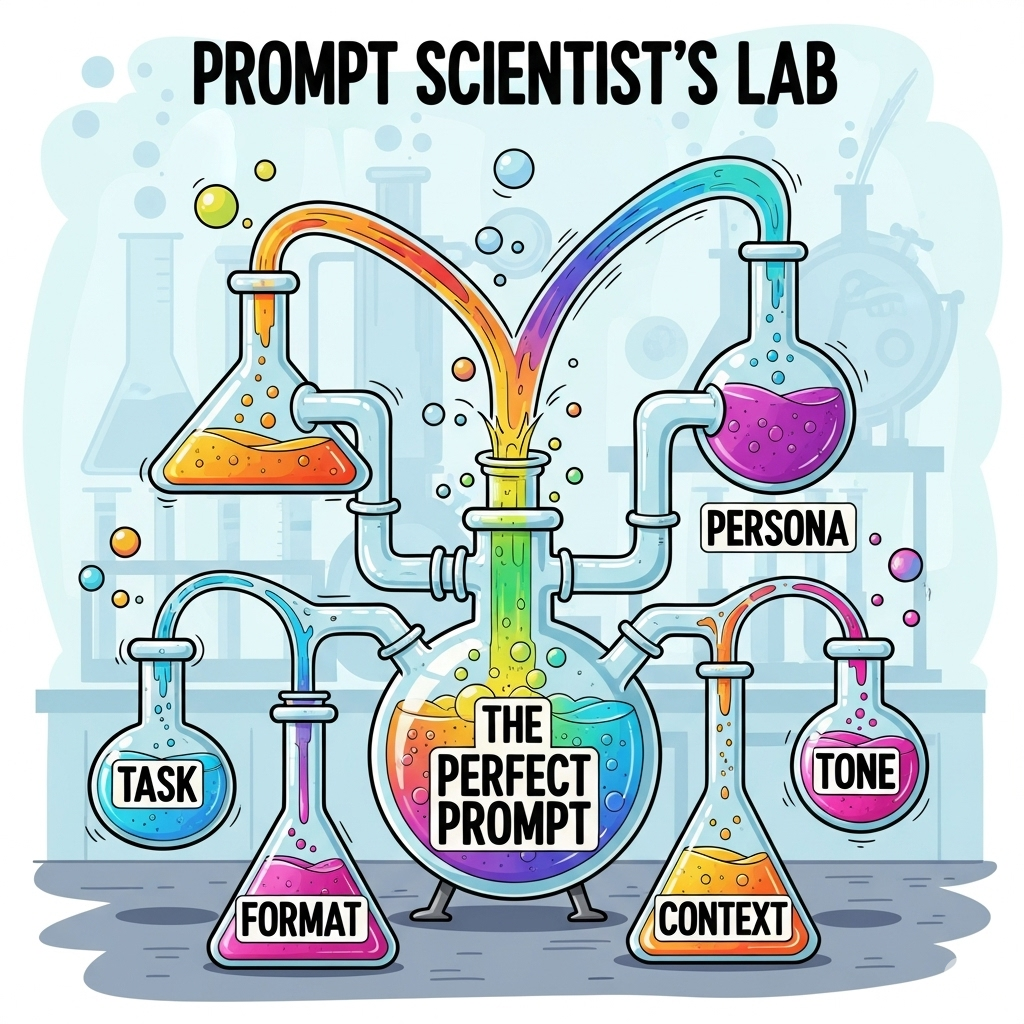

Anatomy of a good prompt

Like a good recipe, prompts also have essential components:

- Task: the action to be performed → Write, Summarize, Classify, Translate, Generate...

- Persona: the role the model should take → "You are an award-winning comedy screenwriter"

- Format: structure and style → "JSON format", "Summary in under 200 words", "3-column table"

- Context and Examples: help the model understand the task better (we’ll see this next)

- Tone: defines the language type → formal and academic, casual and friendly, empathetic, etc.

Creating a prompt is an iterative process: start simple, then evolve with more specificity, context, and examples until you get the result you want.
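The components above can be sketched as a small prompt builder. This is only one possible layout, not a standard: the `PromptSpec` class, the `build_prompt` function, and the `Task:`/`Format:`/`Tone:` labels are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt components listed above."""
    task: str
    persona: str = ""
    output_format: str = ""
    context: str = ""
    tone: str = ""


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty components into a single prompt string."""
    parts = [
        spec.persona,
        f"Task: {spec.task}",
        f"Context: {spec.context}" if spec.context else "",
        f"Format: {spec.output_format}" if spec.output_format else "",
        f"Tone: {spec.tone}" if spec.tone else "",
    ]
    return "\n".join(part for part in parts if part)


prompt = build_prompt(PromptSpec(
    task="Write a cake recipe that must not include carrots.",
    persona="You are an award-winning comedy screenwriter.",
    output_format="Summary in under 200 words",
    tone="casual and friendly",
))
print(prompt)
```

Starting with only `task` filled in and adding the other fields one at a time mirrors the iterative process described above.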

Prompting Techniques

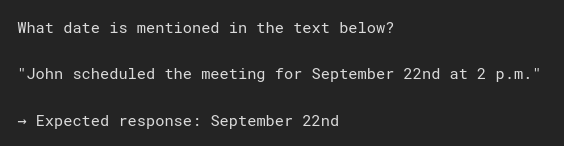

1. Zero-Shot Prompting

The simplest method: you give the task directly with no examples.

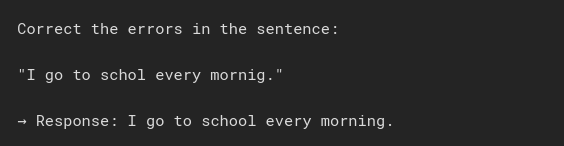

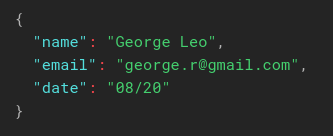

Example 1 – Data extraction

Example 2 – Spelling correction

Pros: quick, clean, simple

Limitations: may struggle with complex tasks
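A zero-shot prompt for the spelling-correction case might look like the sketch below. The `zero_shot_prompt` helper, the `Text:`/`Answer:` labels, and the sample sentence are illustrative assumptions rather than a fixed format.

```python
def zero_shot_prompt(instruction: str, text: str) -> str:
    """Build a zero-shot prompt: an instruction plus the input, with no examples."""
    return f"{instruction}\n\nText: {text}\nAnswer:"


prompt = zero_shot_prompt(
    "Correct the spelling mistakes in the text below. Return only the corrected text.",
    "I recieved teh pakage yestreday.",
)
print(prompt)
```

The prompt ends with an open `Answer:` slot, so the model's completion is the corrected text.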

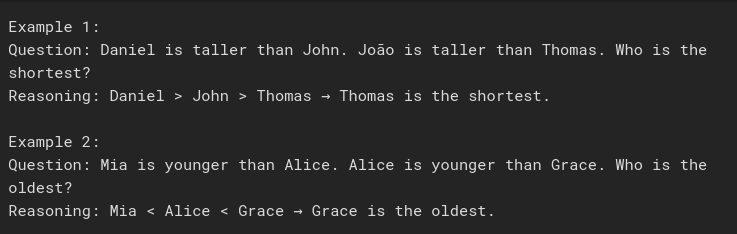

2. Few-Shot Prompting

You provide input + output examples to guide the model.

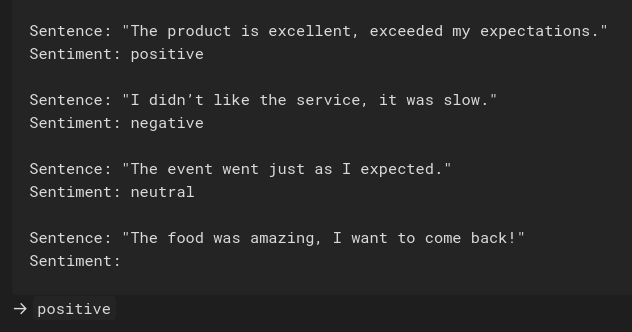

Example 1 – Sentiment classification

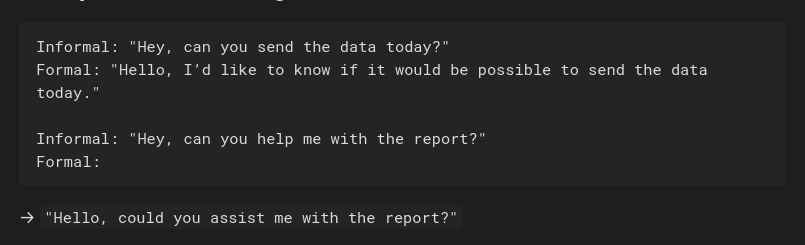

Example 2 – Formalizing emails

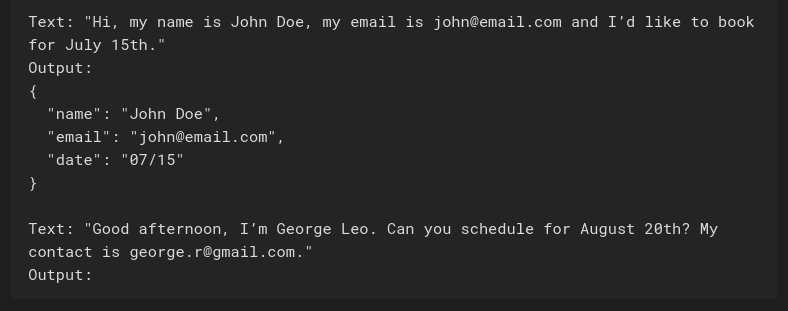

Example 3 – Structured extraction

Pros: more accurate, better for nuanced tasks

Cons: requires good examples and setup
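For sentiment classification, a few-shot prompt can be assembled roughly as follows. The `few_shot_prompt` helper, the `Review:`/`Sentiment:` labels, and the sample reviews are made up for illustration; any consistent input/output pattern should work the same way.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Build a few-shot prompt: instruction, labeled examples, then the new input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final item repeats the pattern but leaves the label for the model to fill in.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


examples = [
    ("The battery lasts all day, I love it.", "positive"),
    ("It broke after two days.", "negative"),
    ("It arrived on Tuesday.", "neutral"),
]
prompt = few_shot_prompt(
    "Classify the sentiment of each review as positive, negative, or neutral.",
    examples,
    "The screen is gorgeous but the speakers are weak.",
)
print(prompt)
```

Because every example follows the same two-line pattern, the examples show the model both the task and the exact output format expected.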

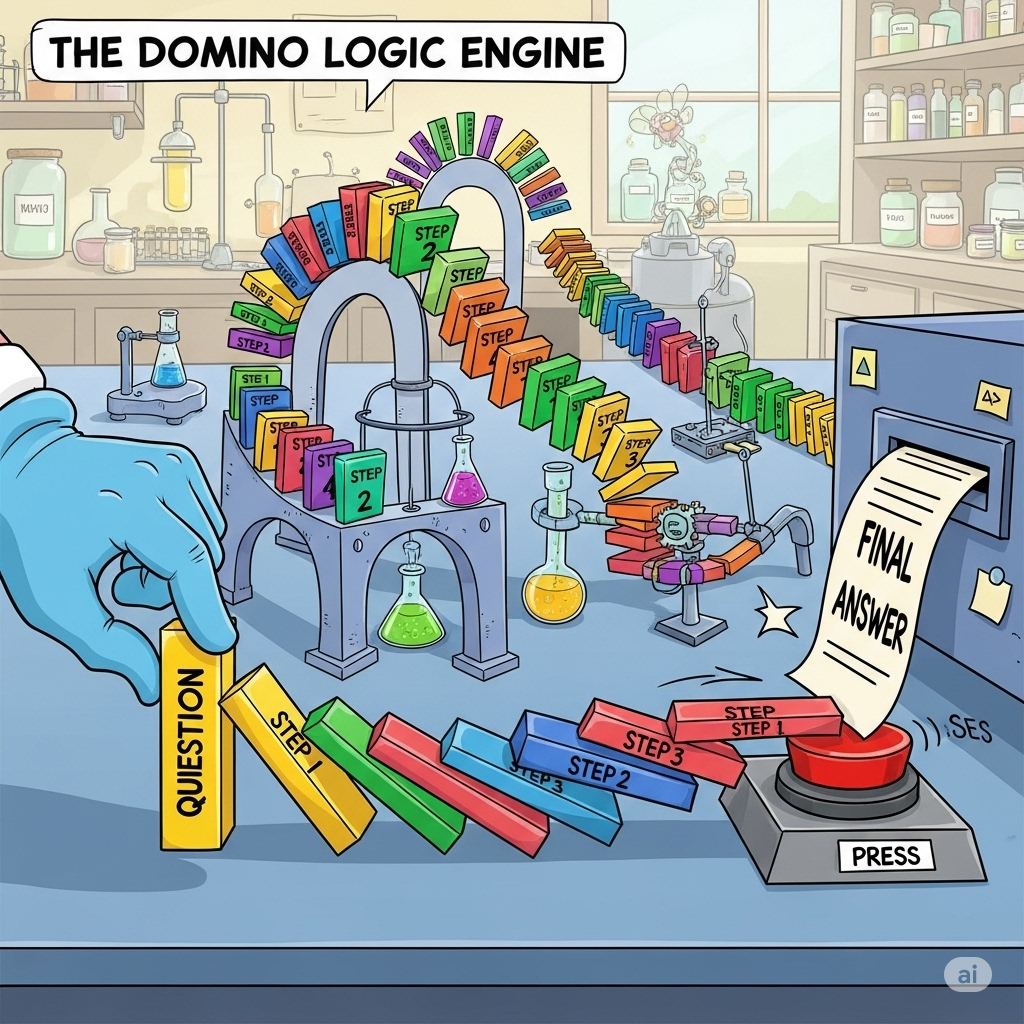

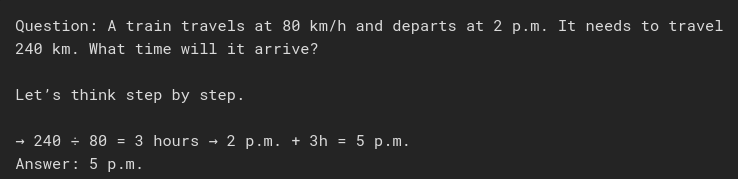

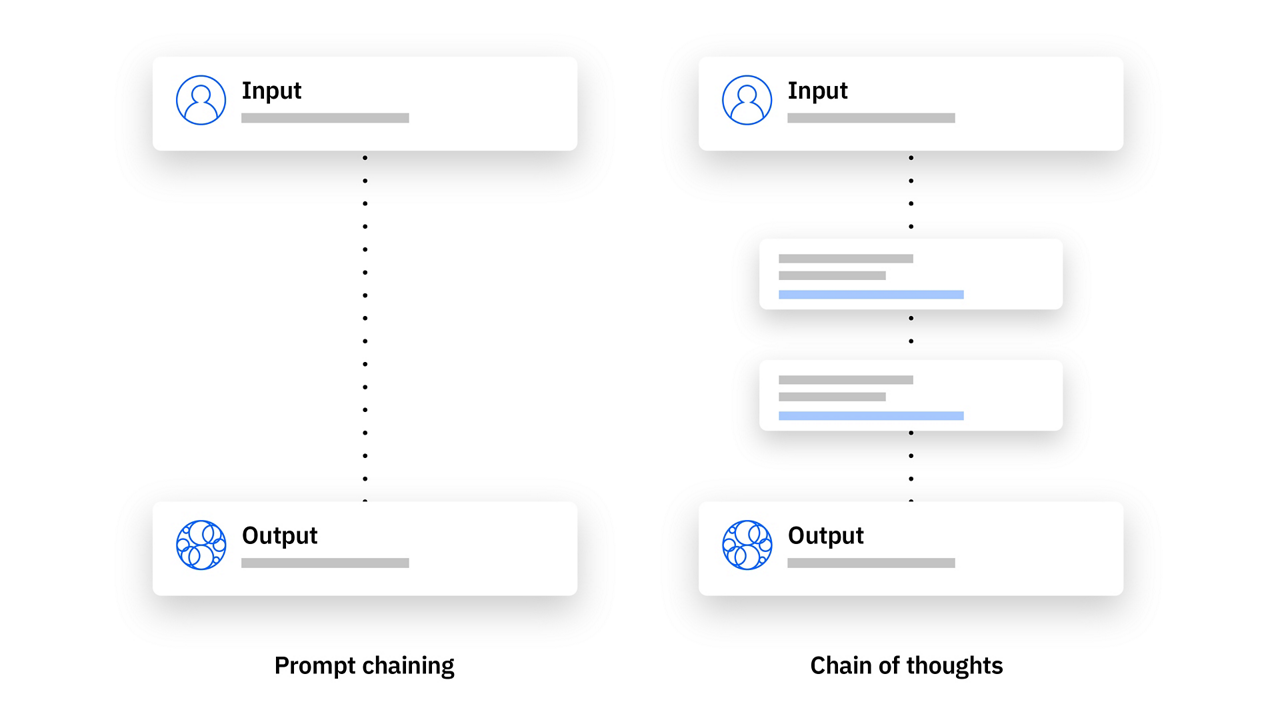

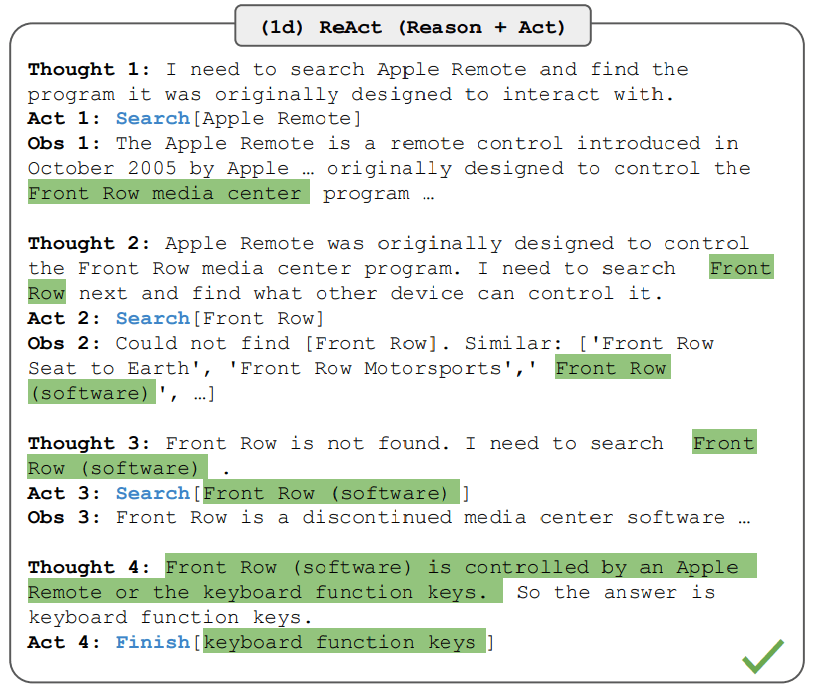

3. Chain-of-Thought Prompting (CoT)

Chain-of-Thought prompting was introduced in the 2022 paper "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models" by researchers at Google Research.

This technique asks the model to think step by step, like “thinking out loud.”

You can apply it as:

- Zero-Shot CoT → "Let’s think step by step"

- Few-Shot CoT → Examples with reasoning

Chain-of-Thought Examples

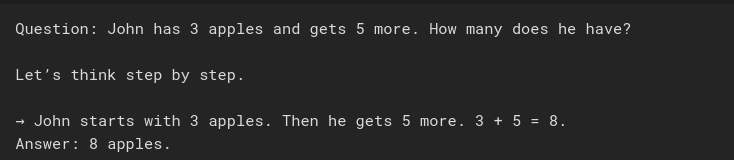

Example 1 – Basic math (zero-shot CoT)

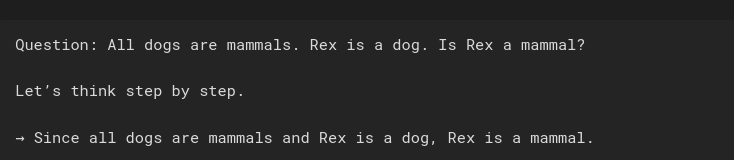

Example 2 – Deductive logic

Example 3 – Time arithmetic

Example 4 – Comparative reasoning (few-shot CoT)
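The two CoT variants can be sketched in code as below. The function names, the `Q:`/`A:` layout, and the time-arithmetic questions are illustrative assumptions; only the "Let's think step by step" trigger phrase comes from the text above.

```python
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str) -> str:
    """Zero-shot CoT: no examples, just a trigger phrase that invites reasoning."""
    return f"Q: {question}\nA: {COT_TRIGGER}"


def few_shot_cot(worked_examples: list[tuple[str, str, str]], question: str) -> str:
    """Few-shot CoT: each example shows its reasoning before the final answer."""
    blocks = [
        f"Q: {q}\nA: {reasoning} The answer is {answer}."
        for q, reasoning, answer in worked_examples
    ]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


worked = [
    (
        "A train leaves at 9:40 and the trip takes 35 minutes. When does it arrive?",
        "9:40 plus 20 minutes is 10:00. The remaining 15 minutes gives 10:15.",
        "10:15",
    ),
]

print(zero_shot_cot("What is 17 + 26?"))
print()
print(few_shot_cot(worked, "A meeting starts at 14:50 and lasts 45 minutes. When does it end?"))
```

The difference from plain few-shot prompting is that each example includes the intermediate reasoning, not just the input and the final answer.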

Conclusion

Prompt Engineering is not just “talking nicely to AI.”

It’s a strategic tool for improving quality, reliability, and control when using language models.

And as we’ve seen, you can apply it with simple or advanced techniques depending on your goal.

Whether for personal use or professional projects, mastering prompt creation puts you several steps ahead in the world of generative AI.
