From Chaos to Chains - How LangChain Simplifies LLM App Development

When we talk about LLMs, the most impactful player in recent times is, in my opinion, without a doubt OpenAI. There’s no way around it: the revolution that ChatGPT brought to the world, for both technical and non-technical people, is something we simply can’t go back from.

But coming in second place, again just my opinion, is the LangChain project, which arrived with the goal of simplifying a bunch of things that used to be really hard to do, and making them super easy.

Creating an AI agent doesn’t just require access to an LLM. It also involves orchestrating multiple components, managing state and memory, connecting to external data sources (both structured and unstructured), evaluating performance, and, finally, having a reliable deployment process. That’s exactly what the LangChain ecosystem aims to solve.

The first product, also called LangChain, came with a clear focus on developers, helping them build applications faster. Later on, the focus shifted a bit: the goal became ensuring that these apps work well and are reliable and transparent. That’s where LangSmith (for observability and evaluation) and LangServe (for deployment) came in.

In this article, I’ll try to give an overview of the first product, LangChain: what it was created to solve, its advantages, and its disadvantages.

LangChain

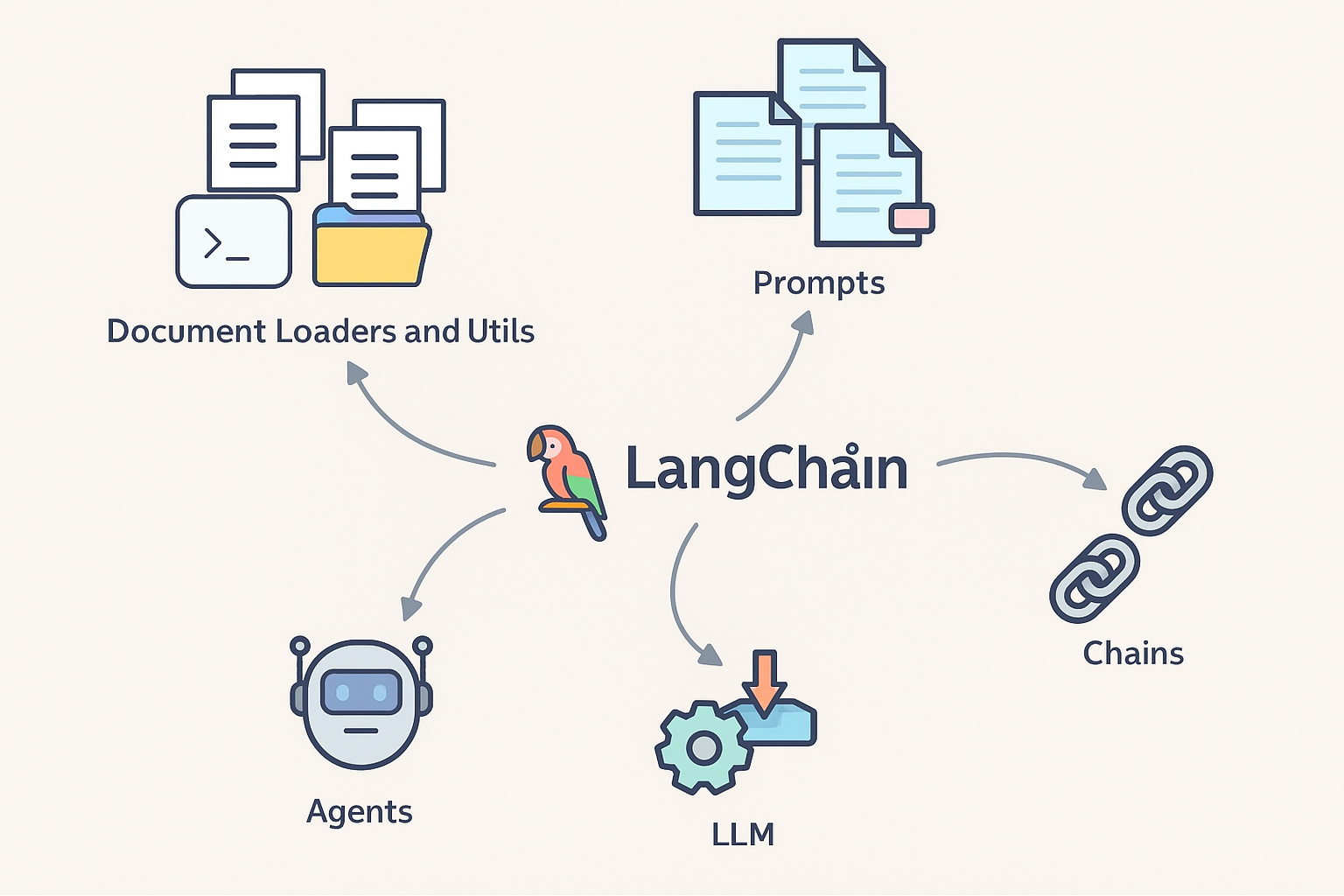

LangChain’s first product shares its name with the ecosystem. It’s a framework for Python and JavaScript, designed to make it easier to work with LLMs, build RAG (retrieval-augmented generation) pipelines, integrate with vector databases, connect to external APIs, and manage memory.

Ever tried to integrate your agent’s data with an external system like Google Drive, Notion, or Discord and immediately felt the headache coming? LangChain has built-in tools that make these integrations much easier. And it doesn’t stop there. Imagine the pain of switching your entire application from something like OpenAI’s API to another provider like Google or Anthropic. LangChain was built to help with that too: because your code talks to a common interface instead of a specific vendor’s API, you can change providers with just a few small tweaks.
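To make the provider-switching idea a bit more concrete, here is a minimal plain-Python sketch. It does not use LangChain’s actual classes: `ChatModel`, `FakeOpenAIModel`, `FakeAnthropicModel`, and `summarize` are all hypothetical names I made up for illustration, and the "models" just return canned strings instead of calling a real API.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical shared interface that every provider wrapper implements."""

    def invoke(self, prompt: str) -> str: ...


@dataclass
class FakeOpenAIModel:
    """Stand-in for an OpenAI-backed model (assumption: no network call is made)."""

    model: str = "openai-placeholder"

    def invoke(self, prompt: str) -> str:
        return f"[{self.model}] {prompt}"


@dataclass
class FakeAnthropicModel:
    """Stand-in for an Anthropic-backed model (assumption: no network call is made)."""

    model: str = "anthropic-placeholder"

    def invoke(self, prompt: str) -> str:
        return f"[{self.model}] {prompt}"


def summarize(model: ChatModel, text: str) -> str:
    """Application code depends only on the shared interface, not on a vendor."""
    return model.invoke(f"Summarize: {text}")


# Switching providers is a one-line change at the call site.
first = summarize(FakeOpenAIModel(), "LangChain overview")
second = summarize(FakeAnthropicModel(), "LangChain overview")
print(first)
print(second)
```

The point of the sketch is the shape, not the details: the application function never mentions a vendor, so swapping one model object for another is the only change needed.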

Document Loaders make it much easier to get data from multiple sources. You can pull data from popular services like Dropbox, Google Drive, YouTube, Notion, MongoDB, and many more.

This means developers don’t have to stress about integrations and can focus instead on building actual features and delivering a great user experience.
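The Document Loader idea can be sketched in a few lines of plain Python. This is only loosely modeled on the pattern (every loader exposes a `load()` method and returns documents with content plus metadata). The loader classes, the `load_all` helper, and the in-memory data below are hypothetical and stand in for real services.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class Document:
    """A piece of text plus metadata describing where it came from."""

    page_content: str
    metadata: dict = field(default_factory=dict)


class Loader(Protocol):
    """Hypothetical common interface: every source knows how to load documents."""

    def load(self) -> list[Document]: ...


class InMemoryNotesLoader:
    """Stand-in for a notes service; reads from a dict instead of a real API."""

    def __init__(self, notes: dict[str, str]) -> None:
        self.notes = notes

    def load(self) -> list[Document]:
        return [
            Document(page_content=body, metadata={"source": f"notes/{title}"})
            for title, body in self.notes.items()
        ]


class InMemoryTranscriptLoader:
    """Stand-in for a video transcript source; joins lines into one document."""

    def __init__(self, video_id: str, lines: list[str]) -> None:
        self.video_id = video_id
        self.lines = lines

    def load(self) -> list[Document]:
        text = " ".join(self.lines)
        return [Document(page_content=text, metadata={"source": f"video/{self.video_id}"})]


def load_all(loaders: list[Loader]) -> list[Document]:
    """Downstream code treats every source the same way."""
    docs: list[Document] = []
    for loader in loaders:
        docs.extend(loader.load())
    return docs


docs = load_all([
    InMemoryNotesLoader({"todo": "write article", "ideas": "compare frameworks"}),
    InMemoryTranscriptLoader("abc123", ["hello", "world"]),
])
for doc in docs:
    print(doc.metadata["source"], "->", doc.page_content)
```

Because every source ends up as the same `Document` shape, whatever comes next (splitting, embedding, indexing) does not need to know where the text originally lived.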

LangChain works through chains.

Simply put:

A chain is an execution flow made up of one or more components, like:

- a language model (LLM),

- a tool, like a search engine, calculator, or database,

- an output parser, to extract structured data,

- a custom prompt,

- and so on.

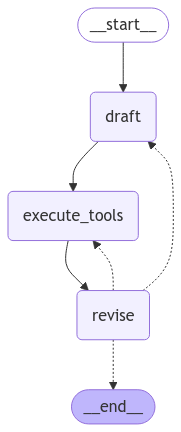
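To show what "an execution flow made up of components" means in practice, here is a small plain-Python sketch of a chain with three steps: a prompt, a model, and an output parser. It is not LangChain’s implementation. `make_chain`, `build_prompt`, `fake_llm`, and `parse_keywords` are hypothetical helpers, and the model is faked with a canned reply so the example runs without an API key.

```python
from typing import Any, Callable

# A step is any function that takes the previous step's output and returns a new value.
Step = Callable[[Any], Any]


def make_chain(*steps: Step) -> Step:
    """Compose steps into one callable that runs them in order."""

    def run(value: Any) -> Any:
        for step in steps:
            value = step(value)
        return value

    return run


def build_prompt(topic: str) -> str:
    """Custom prompt: turns the user's input into instructions for the model."""
    return f"List three keywords about {topic}, separated by commas."


def fake_llm(prompt_text: str) -> str:
    """Stand-in for a language model (assumption: always returns this canned reply)."""
    return "chains, prompts, parsers"


def parse_keywords(raw: str) -> list[str]:
    """Output parser: turns the model's raw text into structured data."""
    return [part.strip() for part in raw.split(",") if part.strip()]


keyword_chain = make_chain(build_prompt, fake_llm, parse_keywords)
keywords = keyword_chain("LangChain")
print(keywords)
```

Each step only needs to agree with its neighbors on input and output, which is what makes it easy to swap a prompt, a model, or a parser without touching the rest of the flow.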

Even though LangChain has a lot of advantages, it’s primarily based on sequential chains, so it can be a bit limited in some scenarios, for example when you need to handle agents with multiple states or non-linear flows.

In applications where the agent needs to revisit steps, execute loops, or coordinate multiple agents that don’t work in a strictly sequential way, the linear structure of chains might not be the best fit.
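Here is a tiny plain-Python sketch of the kind of flow that doesn’t fit a one-pass chain: a draft goes through revise and review steps again and again until it is approved. Everything in it is hypothetical (the `State` fields, the "approve at three words" rule, the `max_attempts` limit). It’s only meant to show that the number of steps depends on the state, not on a fixed sequence.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class State:
    """Shared state that every step reads and updates."""

    draft: str
    attempts: int = 0
    approved: bool = False


def revise(state: State) -> State:
    """Hypothetical revision step: adds one more detail to the draft."""
    new_draft = f"{state.draft} detail{state.attempts + 1}"
    return replace(state, draft=new_draft, attempts=state.attempts + 1)


def review(state: State) -> State:
    """Hypothetical review rule: approve once the draft has at least three words."""
    return replace(state, approved=len(state.draft.split()) >= 3)


def run_until_approved(state: State, max_attempts: int = 5) -> State:
    """Revisit the same steps until the review passes or we give up."""
    while not state.approved and state.attempts < max_attempts:
        state = review(revise(state))
    return state


final = run_until_approved(State(draft="intro"))
print(final)
```

A linear chain would run revise and review exactly once. Here the flow loops back based on what the review decides, which is exactly the situation where a strictly sequential structure starts to feel awkward.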

So, for simpler agents with a linear flow, prototypes, or use cases that don’t require robust state management, LangChain is a great fit for the job.

And just to give you a spoiler for the next article, in cases where you need feedback loops, interdependent states, a human-in-the-loop as part of the workflow, or coordination between multiple autonomous components, LangGraph is the tool that really shines.

With its graph-based model, LangGraph was specifically designed to give developers the control and abstraction needed to model and manage that kind of complexity.

Wrapping Up

LangChain has been a game-changer for anyone working with LLMs, making it much easier to build AI agents, integrate with tons of services, and even switch between model providers. It shines for more straightforward projects. For the more complex ones with back-and-forth flows, LangGraph is the better fit, and that’s the topic of the next article.
